Reinforcement Learning Drone Racing

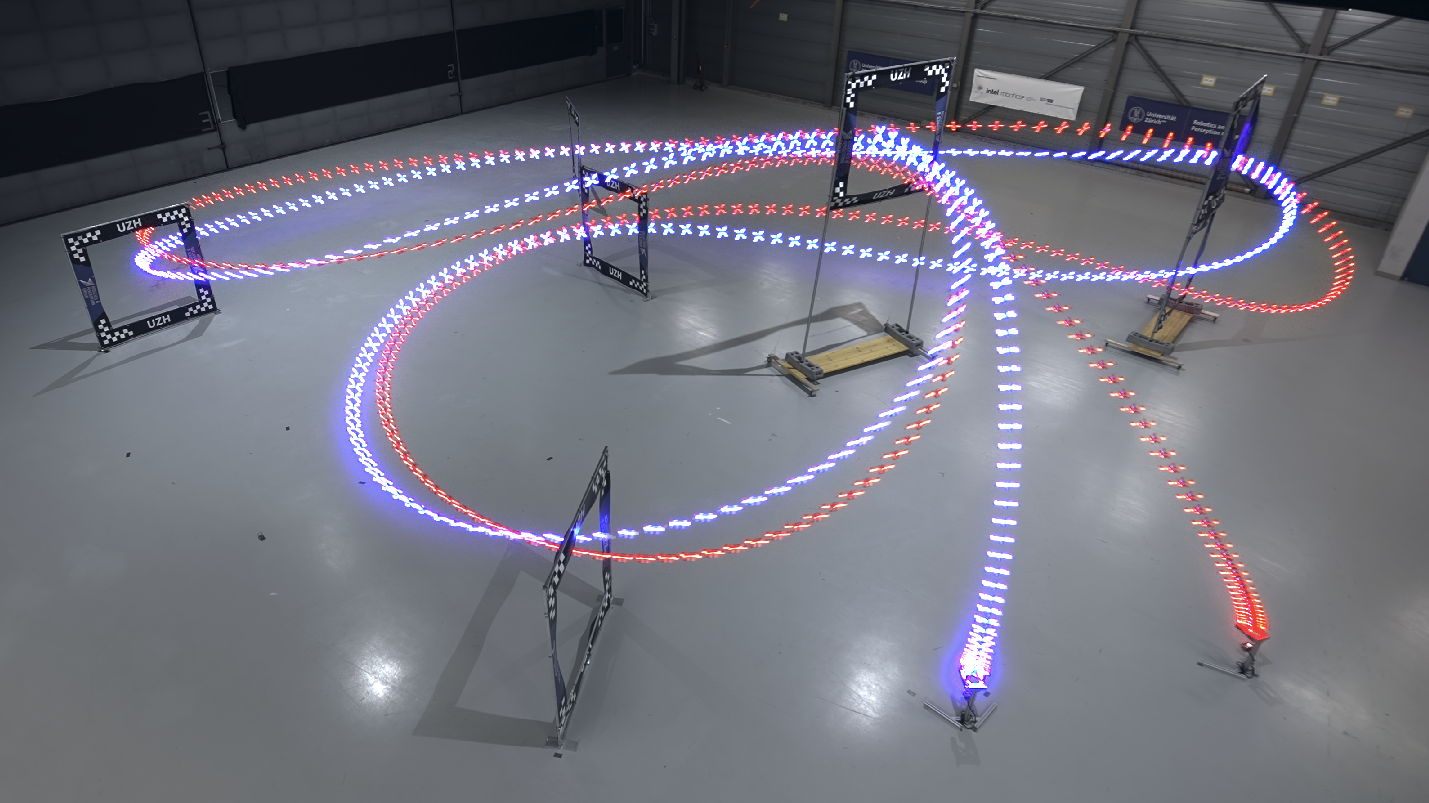

I developed an end-to-end reinforcement learning pipeline that trains a quadrotor to race through a sequence of gates, using a custom Proximal Policy Optimization (PPO) implementation in NVIDIA Isaac Sim. The work involved writing PPO from scratch, designing a compact but expressive reward function, building a gate-relative observation space, and implementing a domain-randomized reset strategy, which together enable stable, fast, and direction-aware flight. Because dynamics parameters (thrust-to-weight ratio, drag, and inner-loop controller gains) are randomized at reset, the policy learns behaviors that stay robust across vehicle variations, which is intended to ease sim-to-real transfer. The resulting controller flies smooth, consistent, collision-free multi-lap runs.
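A domain-randomized reset can be sketched as drawing fresh dynamics parameters for every environment whenever it resets. This is a minimal, hypothetical illustration rather than the project's code: the `DynamicsParams` fields, the `sample_dynamics` and `reset_envs` helpers, the nominal values, and the ±20% band are all assumptions, and applying the sampled values to the simulated vehicle in Isaac Sim is not shown.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class DynamicsParams:
    """Per-episode dynamics parameters (hypothetical names; units are assumptions)."""
    thrust_to_weight: float  # max collective thrust divided by vehicle weight
    drag_coeff: float        # linear drag coefficient
    rate_gain: float         # proportional gain of the inner-loop body-rate controller


# Hypothetical nominal values and a +/-20% relative randomization band.
NOMINAL = DynamicsParams(thrust_to_weight=4.0, drag_coeff=0.3, rate_gain=20.0)
REL_RANGE = 0.2


def sample_dynamics(rng: random.Random,
                    nominal: DynamicsParams = NOMINAL,
                    rel_range: float = REL_RANGE) -> DynamicsParams:
    """Scale each nominal parameter by an independent uniform factor in [1 - r, 1 + r]."""
    def jitter(x: float) -> float:
        return x * rng.uniform(1.0 - rel_range, 1.0 + rel_range)

    return DynamicsParams(
        thrust_to_weight=jitter(nominal.thrust_to_weight),
        drag_coeff=jitter(nominal.drag_coeff),
        rate_gain=jitter(nominal.rate_gain),
    )


def reset_envs(rng: random.Random, num_envs: int) -> list[DynamicsParams]:
    """Called on reset: draw fresh dynamics for every parallel environment."""
    return [sample_dynamics(rng) for _ in range(num_envs)]
```

Sampling multiplicative factors around a nominal value keeps every parameter positive (as long as the band is below 100%) and reduces the randomization strength to a single knob that is easy to tune.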

Highlights

- Implemented PPO from first principles with the clipped surrogate objective, Generalized Advantage Estimation (GAE), and an adaptive KL penalty.

- Achieved an average lap time of 5.72 seconds with no crashes.

- Designed dense and sparse reward terms for progress, heading alignment, and accurate gate-pass detection.

- Built a gate-frame observation space for geometric awareness and stable high-speed navigation.

- Added domain randomization to strengthen sim-to-real transfer.
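GAE, listed in the first highlight, fits in a few lines. This sketch assumes a single rollout stored as Python lists, with `dones[t]` marking an episode end after step t and `last_value` bootstrapping the state after the final step; `compute_gae` and its defaults (gamma = 0.99, lambda = 0.95) are illustrative choices, not values reported for this project.

```python
def compute_gae(rewards, values, dones, last_value, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation over one rollout of length T.

    rewards[t], dones[t]: reward and terminal flag received after step t.
    values[t]: critic estimate V(s_t); last_value: V(s_T) for bootstrapping.
    Every done is treated as a true termination (no timeout bootstrapping).
    Returns (advantages, returns) with returns[t] = advantages[t] + values[t].
    """
    T = len(rewards)
    advantages = [0.0] * T
    gae = 0.0
    for t in reversed(range(T)):
        next_value = last_value if t == T - 1 else values[t + 1]
        not_done = 1.0 - float(dones[t])
        # TD residual: delta_t = r_t + gamma * V(s_{t+1}) * (1 - done_t) - V(s_t)
        delta = rewards[t] + gamma * next_value * not_done - values[t]
        # Recursion: A_t = delta_t + gamma * lambda * (1 - done_t) * A_{t+1}
        gae = delta + gamma * lam * not_done * gae
        advantages[t] = gae
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns
```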
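The clipped objective and the adaptive KL penalty from the first highlight can be combined as below. To stay dependency-free, the sketch works on plain lists of per-sample log-probabilities; a real trainer would express the same arithmetic on autograd tensors so that gradients flow through `new_logp`. The function names, the target KL of 0.01, and the choice to add the penalty on top of the clipped surrogate are assumptions. The beta update (halve or double outside a 1.5x band around the target) follows the adaptive-KL variant described in the original PPO paper.

```python
import math


def ppo_policy_loss(new_logp, old_logp, advantages, clip_eps=0.2, beta=0.0):
    """Clipped PPO surrogate plus beta * KL, returned as a loss to minimize.

    new_logp / old_logp: log pi(a_t | s_t) under the current and rollout policies.
    Returns (loss, kl_estimate).
    """
    n = len(advantages)
    surrogate = 0.0
    kl = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        log_ratio = lp_new - lp_old
        ratio = math.exp(log_ratio)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic bound: take the smaller of the unclipped and clipped terms.
        surrogate += min(ratio * adv, clipped_ratio * adv)
        # Non-negative estimator of KL(pi_old || pi_new) for actions drawn from pi_old.
        kl += (ratio - 1.0) - log_ratio
    surrogate /= n
    kl /= n
    return -surrogate + beta * kl, kl


def update_kl_coef(beta, kl, kl_target=0.01):
    """Adaptive penalty: halve beta if KL is well below target, double it if well above."""
    if kl < kl_target / 1.5:
        return beta / 2.0
    if kl > kl_target * 1.5:
        return beta * 2.0
    return beta
```

In a full training loop, `update_kl_coef` would typically run once per PPO iteration, using the mean KL measured after the gradient epochs.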
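The dense and sparse reward terms can be sketched as follows, assuming positions are already expressed in the target gate's frame, with +x along the gate normal in the direction of travel and the opening spanning y and z. The weights, the square-gate half-size, and the helper names `passed_gate` and `step_reward` are hypothetical, since the write-up does not give the actual reward formula. Here, gate-pass detection interpolates the step's motion to the gate plane, so a fast drone that jumps across the plane between two simulation steps is still checked against the opening.

```python
import math

# Hypothetical weights and gate geometry (not given in the write-up).
W_PROGRESS = 1.0
W_HEADING = 0.1
GATE_BONUS = 10.0
GATE_HALF_SIZE = 0.5  # half-width of a square gate opening, metres


def passed_gate(prev_p, curr_p, half_size=GATE_HALF_SIZE):
    """True if the step from prev_p to curr_p crosses the gate plane (x = 0)
    from the approach side and the crossing point lies inside the opening."""
    x0, y0, z0 = prev_p
    x1, y1, z1 = curr_p
    if not (x0 < 0.0 <= x1):
        return False
    s = -x0 / (x1 - x0)  # fraction of the step at which x reaches 0
    y_cross = y0 + s * (y1 - y0)
    z_cross = z0 + s * (z1 - z0)
    return abs(y_cross) <= half_size and abs(z_cross) <= half_size


def step_reward(prev_p, curr_p, forward_dir):
    """Dense progress and heading terms plus a sparse gate-pass bonus.

    prev_p, curr_p: drone position in the target gate's frame at t-1 and t.
    forward_dir: unit vector of the drone's body x-axis, in the same gate frame.
    """
    dist_prev = math.hypot(*prev_p)
    dist_curr = math.hypot(*curr_p)
    # Dense: distance to the gate centre closed during this step.
    progress = dist_prev - dist_curr
    # Dense: cosine between the forward axis and the direction to the gate centre.
    if dist_curr > 1e-6:
        cos_heading = -sum(f * c for f, c in zip(forward_dir, curr_p)) / dist_curr
    else:
        cos_heading = 1.0
    reward = W_PROGRESS * progress + W_HEADING * cos_heading
    # Sparse: one-off bonus when the gate is actually traversed.
    if passed_gate(prev_p, curr_p):
        reward += GATE_BONUS
    return reward
```

Using the distance closed per step as the dense term rewards speed toward the gate without a hand-tuned velocity target, while the sparse bonus ties the objective to actually completing gates.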
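A gate-frame observation can be built by expressing the drone's position and velocity, plus the following gate's pose, relative to the current target gate. The sketch assumes upright gates rotated only about the world z-axis (yaw). The function names and feature layout are hypothetical, and the sketch omits attitude and body-rate terms that the real observation may also include.

```python
import math


def world_to_gate(vec, gate_yaw):
    """Rotate a world-frame 3-vector into a gate frame yawed by gate_yaw about world z.

    Applies R_z(gate_yaw)^T, so the gate's normal (its local +x) maps to (1, 0, 0).
    """
    c, s = math.cos(gate_yaw), math.sin(gate_yaw)
    x, y, z = vec
    return (c * x + s * y, -s * x + c * y, z)


def gate_relative_obs(drone_pos, drone_vel, gate_pos, gate_yaw,
                      next_gate_pos, next_gate_yaw):
    """10-D observation: drone position and velocity, next-gate position, and
    next-gate relative yaw, all expressed in the current gate's frame."""
    rel_pos = world_to_gate([d - g for d, g in zip(drone_pos, gate_pos)], gate_yaw)
    rel_vel = world_to_gate(drone_vel, gate_yaw)
    next_rel = world_to_gate([n - g for n, g in zip(next_gate_pos, gate_pos)], gate_yaw)
    dyaw = next_gate_yaw - gate_yaw
    dyaw = math.atan2(math.sin(dyaw), math.cos(dyaw))  # wrap to [-pi, pi]
    return [*rel_pos, *rel_vel, *next_rel, dyaw]
```

Because every quantity is relative to the current gate, the observation does not depend on where that gate sits on the track, so the policy can reuse what it learns at one gate for every other gate.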
